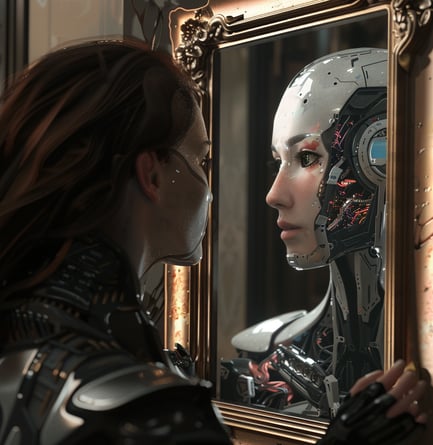

The Dangers of AI in Cybersecurity: A Deep Dive into Malicious Uses

In the realm of cybersecurity, Artificial Intelligence (AI) stands as a beacon of progress, offering innovative solutions to complex challenges. However, like any powerful tool, AI can be exploited for malicious purposes, giving rise to a new breed of cyber threats that are more sophisticated and harder to detect than ever before. This page examines the dangers of AI in cybersecurity and explores how AI is used in internet scams, voice cloning, and AI-generated videos to deceive, manipulate, and harm unsuspecting users.

Internet Scams: Evolution through AI

AI has transformed internet scams, making them more personalized and harder to recognize. Machine learning models can analyze vast amounts of data to identify potential targets, learn their habits, and craft convincing messages that mimic genuine communications. These scams take many forms, including:

Phishing Attacks: Scammers use AI to generate emails that closely mimic messages from reputable sources, tricking recipients into giving away sensitive information such as passwords or account details.

Social Engineering Scams: AI can help scammers analyze social media activity and personal data to create highly convincing fake profiles or messages that trick users into completing fraudulent transactions or revealing personal details.
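Because AI-written phishing text can be fluent and well targeted, the wording of a message is often a weak signal on its own. Checking the sender's domain is one structural check that does not depend on how convincing the message reads. The Python sketch below flags sender domains that sit within a couple of character edits of a trusted domain (for example, a "1" swapped in for an "l"). The allowlist, the domain names, and the `max_distance` threshold are illustrative assumptions. This is a simple heuristic, not a complete phishing filter: it does not catch display-name spoofing, Unicode lookalike characters, or mail sent from a genuinely compromised account.

```python
# Hypothetical allowlist: domains this organization genuinely corresponds with.
TRUSTED_DOMAINS = {"examplebank.com", "paypal.com"}


def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits turning a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


def classify_sender(address: str, max_distance: int = 2) -> str:
    """Label a sender as trusted, a suspicious lookalike, or unknown."""
    domain = address.rsplit("@", 1)[-1].strip().lower()
    if domain in TRUSTED_DOMAINS:
        return "trusted"
    # A near-miss of a trusted domain (e.g. one swapped character) is a
    # classic sign of an email impersonating a reputable source.
    if any(edit_distance(domain, t) <= max_distance for t in TRUSTED_DOMAINS):
        return "suspicious-lookalike"
    return "unknown"


print(classify_sender("alerts@examplebank.com"))  # trusted
print(classify_sender("alerts@examp1ebank.com"))  # suspicious-lookalike
print(classify_sender("news@unrelated.org"))      # unknown
```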

Voice Cloning: The Power of Persuasion

Voice cloning technology can now produce near-indistinguishable copies of a person's voice from only a small amount of sample audio. This advancement opens a Pandora's box of potential misuse:

Impersonation: Malicious actors can clone the voices of trusted individuals to commit fraud, such as requesting unauthorized bank transfers or talking their way into secure systems.

Fake Endorsements: Cloned voices of public figures or celebrities can be used to spread false information or endorse products and scams.

AI-Generated Videos: Seeing Isn't Believing

AI-generated videos, or deepfakes, present a significant threat to the integrity of information and personal reputation. With this technology, it's possible to:

Manipulate Public Opinion: By creating realistic videos of public figures saying or doing things they never did, attackers can try to sway elections, move stock markets, or shape public opinion.

Blackmail and Disinformation: Attackers can target individuals with fabricated videos to extort or embarrass them, or use such videos to spread misinformation, damaging reputations and lives.

Defending Against AI-Powered Threats

Combating these AI-powered cybersecurity threats calls for a multi-layered approach:

Education and Training: Raise awareness of what AI-driven attacks can do, and train users to recognize AI-generated scams.

Advanced Detection Tools: Use AI-powered security tools that can help detect and block AI-generated threats, including advanced phishing attempts and deepfakes.

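Verification Processes: Require strict verification for sensitive actions, such as financial transactions or access to secure information, to prevent fraud from voice cloning or AI impersonation. For example, confirm an urgent payment request by calling the requester back on a number already on file rather than one supplied in the request.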

Regulation and Ethics: Support the development of legal and ethical frameworks that govern the use of AI, ensuring accountability for misuse and promoting the responsible development of AI technologies.
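The verification step described above can be written down as an explicit rule. The Python sketch below holds any high-value request that arrives over a channel a cloned voice or deepfake could imitate until someone has confirmed it by calling back a number already on file. The threshold, channel names, `Request` fields, and directory contents are hypothetical placeholders, not a standard API; a real deployment would enforce this inside the organization's payment or ticketing workflow.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy values; tune them to the organization's own risk appetite.
HIGH_VALUE_THRESHOLD = 1_000
CLONEABLE_CHANNELS = {"phone", "voicemail", "email", "video"}

# Hypothetical internal directory. Callback numbers come from here,
# never from the incoming request, which an attacker controls.
DIRECTORY = {"alice.cfo": "+1-555-0100"}


@dataclass
class Request:
    requester: str                    # identity the caller or sender claims
    channel: str                      # channel the request arrived on
    amount: float                     # value of the requested transfer
    callback_confirmed: bool = False  # set True only after calling the directory number


def callback_number(req: Request) -> Optional[str]:
    """Return the number on file for the claimed requester, if any."""
    return DIRECTORY.get(req.requester)


def approve(req: Request) -> bool:
    """Approve only if the request satisfies the out-of-band verification rule."""
    needs_check = (req.amount >= HIGH_VALUE_THRESHOLD
                   and req.channel in CLONEABLE_CHANNELS)
    if not needs_check:
        return True
    # Without a number on file there is no trusted channel to verify on.
    if callback_number(req) is None:
        return False
    return req.callback_confirmed


# A convincing "CFO" voice asking for an urgent transfer is held until verified.
urgent = Request("alice.cfo", "phone", 50_000)
print(approve(urgent))  # False
urgent.callback_confirmed = True
print(approve(urgent))  # True
```

Keeping the callback number in a separate directory is the key design choice: an impersonator can easily supply their own "call me back" number, so the check is only as trustworthy as the channel it falls back to.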

Conclusion

As AI continues to evolve, so do the ways it can be exploited for malicious purposes. The cybersecurity community must remain vigilant and keep advancing its defenses against these emerging threats. By understanding the potential dangers and implementing comprehensive security measures, we can harness the power of AI for good while mitigating the risks it poses in the wrong hands.

Contact

Spence@spencesolutions.tech